IBM's Triplex

AI BRAIN

Based on a ternary architecture

circa IBM 2100

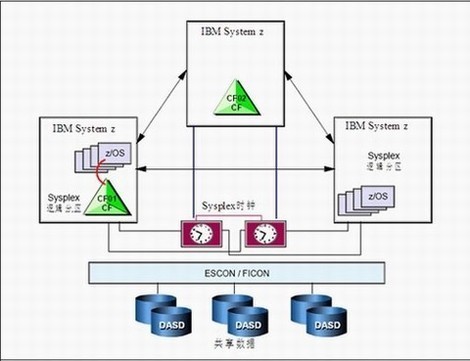

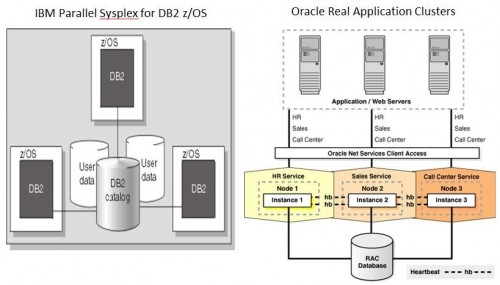

This section applies the paradigm of threes to mainframe clustering technology. It is written from a technical perspective; however, you may look beyond the technical terms for patterns of three. What follows is a mainframe computer architecture based on the paradigm-of-threes theory. The Triplex mainframe cluster is envisioned as the most advanced, sophisticated and powerful of all commercially available computer architectures. Over the years, many terms have been used or modified for branding reasons. To help explain the internal workings of the Parallel Triplex, the relevant technical terms are defined below.

1. CPU - Central Processing Unit (here, primarily that of a mainframe computer).

2. S/390 - Another notation for System/390, an IBM mainframe line now called the zSeries.

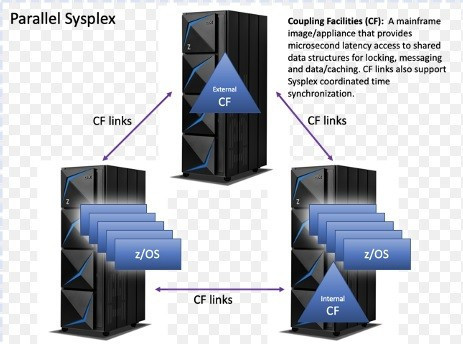

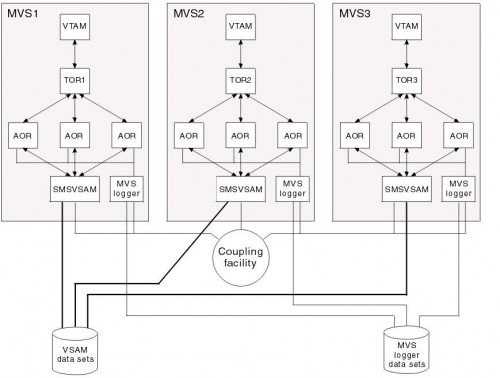

3. CF, CSF - Stands for Coupling Facility, or Coupling Support Facility. It is used to describe the inter-processor communication between one mainframe and another in a clustered configuration, aka the BRAIN.

4. Buffer - A device or area used to store data temporarily and deliver it at a rate different from that at which it was received.

5. Cache - A fast storage buffer in the central processing unit (CPU) of a computer, often called cache memory. This differs from cache storage, such as the stored copies of previously visited web pages.

6. Synchronous - Occurring or existing at the same time.

7. Asynchronous - Lack of temporal concurrence; absence of synchronism.

8. TriPlex - An IBM Parallel Sysplex cluster composed of three processing nodes (three mainframes), demonstrating an application of the paradigm of threes to mainframe clustering.

9. Trilateralism - The addition of a third mainframe in parallel configuration with two others, for three in total.

10. Node - A mainframe computer in a clustered configuration.

Mainframe, parallel and clustered systems, initially used for numerically intensive operations, are now mainstream in the market. Their architectures span a broad spectrum that includes massively parallel processors built for very high performance. To reach that level of performance, the Triplex contains futuristic multisystem data-sharing technology that allows direct, concurrent read/write access to shared data from all processing nodes in the parallel (trilateral) configuration, without degrading performance, data integrity or compatibility. Each node can concurrently cache shared data in local processor memory through hardware-assisted, cluster-wide serialization and coherency controls. This in turn enables work requests associated with a single workload (e.g. business transactions or database queries) to be dynamically distributed for parallel execution on the nodes of the Triplex cluster, based on available processor capacity. Through this technology, three clustered mainframes can be harnessed to work in a trilateral configuration on common workloads, taking the commercial strengths of the mainframe platform to new levels of competitive price/performance and scalable growth with continuous availability. With the Parallel Triplex, downtime can be a thing of the past.
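
Capacity-based distribution of work requests can be sketched as a simple dispatcher. This is a minimal Python illustration under stated assumptions, not the Triplex workload manager: `Node`, `dispatch`, the node names `SYSA`/`SYSB`/`SYSC` and the integer "capacity" metric are all hypothetical, and the policy (send each request to the node with the most spare capacity at that moment) is only one plausible reading of "based on available processor capacity".

```python
from dataclasses import dataclass


@dataclass
class Node:
    """One mainframe in a hypothetical three-node Triplex cluster."""
    name: str
    capacity: int  # assumed metric: work units the node can run at once
    busy: int = 0  # work units currently running

    @property
    def available(self) -> int:
        return self.capacity - self.busy


def dispatch(requests: list, nodes: list) -> dict:
    """Place each request on the node with the most spare capacity right now."""
    placement = {}
    for request in requests:
        # max() returns the first node on a tie, so ties favor list order.
        target = max(nodes, key=lambda n: n.available)
        if target.available <= 0:
            raise RuntimeError("all nodes are saturated")
        target.busy += 1
        placement[request] = target.name
    return placement


cluster = [Node("SYSA", 2), Node("SYSB", 3), Node("SYSC", 1)]
print(dispatch(["txn1", "txn2", "txn3", "txn4"], cluster))
# {'txn1': 'SYSB', 'txn2': 'SYSA', 'txn3': 'SYSB', 'txn4': 'SYSA'}
```

Because placement is recomputed per request, a node that finishes work (lower `busy`) immediately becomes a more attractive target, which is the point of distributing a single workload dynamically rather than statically.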

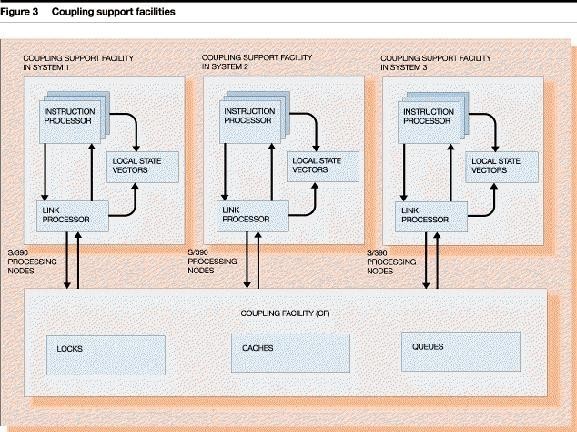

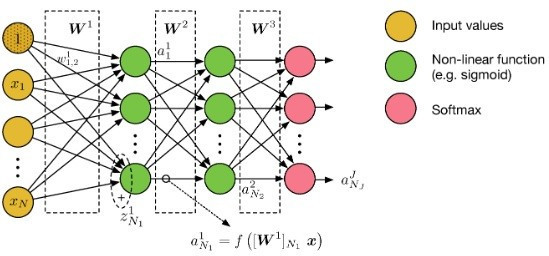

The Coupling Support Facility is composed of software and interconnections that may be thought of as a rudimentary BRAIN. Specialized hardware on each of the three processing nodes (mainframes) in the Triplex cluster controls communication between the processors and the BRAIN. The specialized hardware required is depicted in Figure 3 above. It consists of dedicated mainframe instructions, high-speed optical links and link microprocessors. It also uses processor memory to hold local state vectors, which track locally the state of resources maintained in the BRAIN. Because this state is available locally, a node can observe critical state information without unnecessary communication with the BRAIN.
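
The saving that local state vectors provide can be illustrated with a short Python sketch. It assumes, purely for illustration, one validity bit per local buffer; `LocalCacheVector`, `Node`, `read` and the `messages_to_brain` counter are hypothetical names. A set bit lets the node reuse its copy without any communication; only a cleared bit forces a trip to the BRAIN.

```python
class LocalCacheVector:
    """Hypothetical local state vector: one validity bit per local buffer.

    The bits live in the node's own processor memory, so testing one
    costs no message to the BRAIN.
    """

    def __init__(self, size: int) -> None:
        self.bits = [False] * size

    def set_valid(self, index: int) -> None:
        self.bits[index] = True

    def invalidate(self, index: int) -> None:
        # In the text, this is done remotely by a BRAIN secondary command.
        self.bits[index] = False

    def is_valid(self, index: int) -> bool:
        return self.bits[index]


class Node:
    """Hypothetical processing node with local buffers and a cache vector."""

    def __init__(self, buffers: int) -> None:
        self.vector = LocalCacheVector(buffers)
        self.buffers = [None] * buffers
        self.messages_to_brain = 0  # round trips that could not be avoided

    def read(self, index: int, fetch) -> str:
        if self.vector.is_valid(index):  # local bit test, no communication
            return self.buffers[index]
        self.messages_to_brain += 1  # missing or stale copy: refresh it
        self.buffers[index] = fetch(index)
        self.vector.set_valid(index)
        return self.buffers[index]


def fetch_record(index: int) -> str:
    return f"record-{index}"


node = Node(buffers=4)
node.read(0, fetch_record)
node.read(0, fetch_record)      # served from the local buffer
print(node.messages_to_brain)   # 1
node.vector.invalidate(0)       # another node updated the item
node.read(0, fetch_record)
print(node.messages_to_brain)   # 2
```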

The BRAIN provides several critical functions. The first is command delivery: the means by which a program sends commands to request locking, caching and queuing actions. Delivery supports both synchronous and asynchronous modes. A synchronous command is completed by the time the mainframe instruction that initiated it ends, which relies on highly optimized, low-latency transport protocols. An asynchronous command is completed after the initiating instruction has ended; its completion is then reported to the operating system through a notification technique that avoids a processor interruption.
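
The two delivery modes can be contrasted in a minimal Python sketch. It is an analogy under stated assumptions, not the actual transport: a method call stands in for the initiating mainframe instruction, a background thread stands in for the BRAIN finishing an asynchronous command, and a list of completion bits that the operating system polls stands in for interrupt-free notification. `CommandDelivery`, `send_sync`, `send_async` and `poll` are hypothetical names.

```python
import threading


class CommandDelivery:
    """Hypothetical model of synchronous vs asynchronous command delivery."""

    def __init__(self, slots: int) -> None:
        # Completion bits: set by the "BRAIN", polled by the operating
        # system, so no interrupt is needed to learn about completion.
        self.completion_bits = [False] * slots
        self.results = [None] * slots
        self._lock = threading.Lock()

    def _execute(self, command: str) -> str:
        return f"done:{command}"  # placeholder for a lock/cache/list action

    def send_sync(self, command: str) -> str:
        # Finished before the initiating "instruction" (this call) returns.
        return self._execute(command)

    def send_async(self, command: str, slot: int) -> threading.Thread:
        # Returns at once; completion is signaled later by setting a bit.
        def worker() -> None:
            result = self._execute(command)
            with self._lock:
                self.results[slot] = result
                self.completion_bits[slot] = True

        thread = threading.Thread(target=worker)
        thread.start()
        return thread

    def poll(self) -> list:
        """Return the slots whose asynchronous commands have completed."""
        with self._lock:
            return [i for i, done in enumerate(self.completion_bits) if done]


delivery = CommandDelivery(slots=4)
print(delivery.send_sync("READ-LOCK"))  # done:READ-LOCK
pending = delivery.send_async("WRITE-DATA", slot=2)
pending.join()  # only to make this demo deterministic
print(delivery.poll(), delivery.results[2])  # [2] done:WRITE-DATA
```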

The next area of the BRAIN is secondary command execution. Secondary commands are sent to a processing node as part of performing certain command operations and are executed there. With one exception, they directly update the local state vectors to reflect the new resource status. For example, a secondary command may store an invalid-buffer indication at a processing node to signal that the node no longer has the latest version of a locally cached data item.
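
The invalid-buffer example can be sketched as cross-invalidation driven by a directory. This Python model is illustrative only: `Brain`, `register`, `write` and `_secondary_invalidate` are hypothetical, each node's local state vector is simplified to a dictionary of validity flags, and the choice to leave only the writer registered after an update is an assumption of the sketch.

```python
class Brain:
    """Hypothetical directory recording which nodes hold a copy of each item."""

    def __init__(self) -> None:
        self.interest = {}       # item -> set of nodes holding a local copy
        self.local_vectors = {}  # node -> {item: valid flag}

    def register(self, node: str, item: str) -> None:
        self.interest.setdefault(item, set()).add(node)
        self.local_vectors.setdefault(node, {})[item] = True

    def write(self, writer: str, item: str) -> list:
        """Record an update and send invalid-buffer secondary commands."""
        invalidated = []
        for node in sorted(self.interest.get(item, set())):
            if node != writer:
                self._secondary_invalidate(node, item)
                invalidated.append(node)
        self.interest[item] = {writer}  # sketch: only the writer stays current
        self.local_vectors.setdefault(writer, {})[item] = True
        return invalidated

    def _secondary_invalidate(self, node: str, item: str) -> None:
        # The secondary command updates the node's local state vector
        # directly; the program running on that node is not involved.
        self.local_vectors[node][item] = False


brain = Brain()
for name in ("SYSA", "SYSB", "SYSC"):
    brain.register(name, "ACCT-42")
print(brain.write("SYSB", "ACCT-42"))          # ['SYSA', 'SYSC']
print(brain.local_vectors["SYSA"]["ACCT-42"])  # False
```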

The next area of the BRAIN is local state vector control. It introduces a set of mainframe instructions that interrogate and update local state vectors. A DEFINE VECTOR instruction dynamically allocates, deallocates, or changes the size of a local state vector. The vectors reside in protected storage and are accessible only through a uniquely assigned token, which ensures that programs do not inadvertently overlay vectors they have no authority to access. Further instructions test and manipulate the bits in the state vectors that convey the state of the associated resources.
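
Token-protected vector management can be sketched as follows. This is a hypothetical Python model, not the instruction set itself: `VectorStore`, `define_vector` (with the actions `"allocate"`, `"resize"` and `"deallocate"` standing in for the three DEFINE VECTOR functions), `set_bit`, `reset_bit` and `test_bit` are illustrative names, and a random hex string from Python's `secrets` module plays the role of the unique token.

```python
import secrets
from typing import Optional


class VectorStore:
    """Hypothetical protected storage holding local state vectors."""

    def __init__(self) -> None:
        self._vectors = {}  # token -> list of bits

    def define_vector(self, action: str, size: int = 0,
                      token: Optional[str] = None) -> Optional[str]:
        """Sketch of DEFINE VECTOR: 'allocate', 'resize' or 'deallocate'."""
        if action == "allocate":
            new_token = secrets.token_hex(8)  # hard-to-guess access token
            self._vectors[new_token] = [False] * size
            return new_token
        bits = self._lookup(token)  # every other action needs a valid token
        if action == "resize":
            if size < len(bits):
                del bits[size:]  # shrink: drop trailing bits
            else:
                bits.extend([False] * (size - len(bits)))  # grow: new bits reset
            return token
        if action == "deallocate":
            del self._vectors[token]
            return None
        raise ValueError(f"unknown DEFINE VECTOR action: {action!r}")

    def _lookup(self, token: Optional[str]) -> list:
        if token not in self._vectors:
            raise PermissionError("invalid token: no access to this vector")
        return self._vectors[token]

    def set_bit(self, token: str, index: int) -> None:
        self._lookup(token)[index] = True

    def reset_bit(self, token: str, index: int) -> None:
        self._lookup(token)[index] = False

    def test_bit(self, token: str, index: int) -> bool:
        return self._lookup(token)[index]


store = VectorStore()
tok = store.define_vector("allocate", size=8)
store.set_bit(tok, 3)
store.define_vector("resize", size=16, token=tok)
print(store.test_bit(tok, 3))  # True: resizing keeps existing bits
try:
    store.test_bit("forged-token", 3)
except PermissionError as err:
    print(err)
```

Routing every access through `_lookup` is what gives the "no inadvertent overlay" property in this sketch: a program that never received a token cannot name a vector at all.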

There are three kinds of local state vectors used:

(1) Local cache vectors are used in conjunction with the BRAIN's cache structures to track local buffer coherency.

(2) List-notification vectors are used with the list structures to signal the BRAIN's list empty/nonempty state transitions.

(3) List-notification vectors are also used by the BRAIN to indicate the completion of asynchronous command operations.
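
The empty/nonempty signaling in item (2) can be sketched as a queue that flips one bit in a node's notification vector only on a state transition. In this hypothetical Python model, `ListStructure`, `push`, `pop` and the bit assignment are illustrative assumptions. A consumer can check the bit locally instead of asking the BRAIN whether the list has entries.

```python
from collections import deque


class ListStructure:
    """Hypothetical BRAIN list that signals empty <-> nonempty transitions."""

    def __init__(self, notification_vector: list, bit: int) -> None:
        self.entries = deque()
        self.vector = notification_vector  # a node's list-notification vector
        self.bit = bit                     # the bit assigned to this list

    def push(self, item) -> None:
        was_empty = not self.entries
        self.entries.append(item)
        if was_empty:
            self.vector[self.bit] = True   # transition: empty -> nonempty

    def pop(self):
        item = self.entries.popleft()
        if not self.entries:
            self.vector[self.bit] = False  # transition: nonempty -> empty
        return item


vector = [False] * 4
work_queue = ListStructure(vector, bit=1)
work_queue.push("job-A")
work_queue.push("job-B")  # no transition, so the bit is not touched again
print(vector)             # [False, True, False, False]
while vector[1]:          # local test only; no query to the BRAIN
    print(work_queue.pop())
print(vector)             # [False, False, False, False]
```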

A fail-safe feature of the BRAIN is hardware-assisted system isolation. This provides a system fencing function that prevents a failing node from accessing shared external resources during cluster fail-over recovery.

The BRAIN's paradigm-of-threes architecture was designed to support three caching protocols:
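
The fencing behavior can be sketched as a shared resource that refuses requests from any node placed on a fence list. This is a software analogy for what the text describes as hardware-assisted isolation; `SharedDisk`, `fence` and `write` are hypothetical names, and the scenario (fence the failing node before survivors take over its work) is an assumption about how fail-over would use the function.

```python
class SharedDisk:
    """Hypothetical shared resource that honors a cluster-wide fence list."""

    def __init__(self) -> None:
        self.fenced = set()
        self.blocks = {}

    def fence(self, node: str) -> None:
        # Issued during fail-over, before survivors take over the failed
        # node's work, so a half-alive node cannot corrupt shared data.
        self.fenced.add(node)

    def write(self, node: str, block: int, data: str) -> None:
        if node in self.fenced:
            raise PermissionError(f"{node} is fenced from shared resources")
        self.blocks[block] = data


disk = SharedDisk()
disk.write("SYSA", 7, "v1")
disk.fence("SYSA")  # SYSA stopped responding; isolate it
try:
    disk.write("SYSA", 7, "stale")
except PermissionError as err:
    print(err)      # SYSA is fenced from shared resources
disk.write("SYSB", 7, "v2")  # a surviving node carries on
print(disk.blocks[7])        # v2
```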

Directory-only cache. A directory-only cache uses the global buffer-coherency tracking mechanism provided by the Coupling Support Facility (aka the BRAIN). This mechanism records which nodes hold current local copies of each data item, so that every node can tell whether its copy is still valid; this relationship is called buffer coherency. However, a directory-only cache does not store data in the cache structure. It allows read/write sharing of data with local buffer coherency, but dated or down-level local copies of data items are refreshed by accessing the shared disk containing the data item, and all updates are written permanently to disk as part of the write operation.
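
A directory-only cache can be sketched in a few lines of Python. The model is hypothetical (`DirectoryOnlyCache`, `read`, `write` and the `disk_io` counter are illustrative names, and a dictionary stands in for the shared disk); it shows that coherency is tracked globally while every refresh and every update still costs a disk I/O.

```python
class DirectoryOnlyCache:
    """Hypothetical directory-only cache: tracks coherency, stores no data."""

    def __init__(self, disk: dict) -> None:
        self.disk = disk      # shared disk: item -> data
        self.directory = {}   # item -> nodes holding a valid local copy
        self.disk_io = 0

    def read(self, node: str, item: str) -> str:
        # Missing or down-level local copies are always refreshed from disk.
        self.disk_io += 1
        self.directory.setdefault(item, set()).add(node)
        return self.disk[item]

    def write(self, node: str, item: str, data: str) -> set:
        self.disk[item] = data  # the update is hardened on disk immediately
        self.disk_io += 1
        stale = self.directory.get(item, set()) - {node}
        self.directory[item] = {node}  # all other local copies are now invalid
        return stale  # nodes that would receive invalid-buffer signals


cache = DirectoryOnlyCache({"ACCT-42": "100"})
cache.read("SYSA", "ACCT-42")
cache.read("SYSB", "ACCT-42")
print(cache.write("SYSA", "ACCT-42", "150"))           # {'SYSB'}
print(cache.read("SYSB", "ACCT-42"), cache.disk_io)    # 150 4
```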

Store-through cache. With a store-through cache, in addition to global buffer-coherency tracking, updated data items are written to the cache structure as well as to shared disk. The directory entries for these data items are marked as unchanged, since the version of the data in the Coupling Support Facility matches the version on disk. Down-level local buffer copies can therefore be refreshed rapidly from the global Coupling Support Facility cache, avoiding I/O operations to the shared disk.
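
A store-through cache differs from the directory-only case mainly in where refreshes come from. In this hypothetical Python sketch (`StoreThroughCache`, `write`, `refresh` and `disk_io` are illustrative names), each write goes to both the global cache and the disk and is marked unchanged, so a later refresh is served from the cache with no extra disk I/O. The sketch assumes, for simplicity, that a read which misses the cache does not populate it.

```python
class StoreThroughCache:
    """Hypothetical store-through cache: writes go to cache and to disk."""

    def __init__(self, disk: dict) -> None:
        self.disk = disk      # shared disk: item -> data
        self.cache = {}       # item -> (data, changed_flag)
        self.directory = {}   # item -> nodes holding a valid local copy
        self.disk_io = 0

    def write(self, node: str, item: str, data: str) -> set:
        self.disk[item] = data
        self.disk_io += 1
        self.cache[item] = (data, False)  # unchanged: matches the disk copy
        stale = self.directory.get(item, set()) - {node}
        self.directory[item] = {node}
        return stale

    def refresh(self, node: str, item: str) -> str:
        if item in self.cache:
            data, _changed = self.cache[item]  # fast path: no disk I/O
        else:
            data = self.disk[item]             # sketch: misses are not cached
            self.disk_io += 1
        self.directory.setdefault(item, set()).add(node)
        return data


disk = {"ACCT-42": "100"}
cache = StoreThroughCache(disk)
cache.refresh("SYSA", "ACCT-42")                        # first read hits disk
print(cache.write("SYSB", "ACCT-42", "150"))            # {'SYSA'}
print(cache.refresh("SYSA", "ACCT-42"), cache.disk_io)  # 150 2
```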

Store-in cache. When used as a store-in cache, the database manager (software, not a human) writes updated data items to the Coupling Support Facility cache structure synchronously with the commit of the updates. This protocol has additional performance advantages over the previous two because it enables fast commit of write operations. However, the data is written to the cache structure marked as changed with respect to the disk version. The database manager is therefore responsible for casting out changed data items from the global cache to shared disk as part of a periodic scrubbing operation, which frees global cache resources for reuse. It also bears an additional recovery responsibility: recovering changed data items from its logs in the event of a Coupling Support Facility (BRAIN) structure failure. Note below the variations and details of the paradigm of threes applied to mainframe clustering.
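
The store-in protocol, with its castout and log-based recovery duties, can be sketched as follows. All names (`StoreInCache`, `commit`, `castout`, `recover_after_structure_failure`) are hypothetical, and the sketch simplifies the log to the records not yet cast out; a real database manager keeps fuller logs. The point it shows is that commit touches only the global cache, so the disk lags until the periodic castout.

```python
class StoreInCache:
    """Hypothetical store-in cache: commits go to the global cache only."""

    def __init__(self, disk: dict) -> None:
        self.disk = disk   # shared disk: item -> data
        self.cache = {}    # item -> [data, changed_flag]
        self.log = []      # log records for updates not yet cast out
        self.disk_io = 0

    def commit(self, item: str, data: str) -> None:
        self.log.append((item, data))    # the database manager logs the update
        self.cache[item] = [data, True]  # changed: the disk copy is down-level

    def castout(self) -> list:
        """Periodic scrub: write changed items to disk, mark them unchanged."""
        written = []
        for item, entry in self.cache.items():
            if entry[1]:
                self.disk[item] = entry[0]
                self.disk_io += 1
                entry[1] = False
                written.append(item)
        self.log.clear()  # simplification: every logged update is now on disk
        return written

    def recover_after_structure_failure(self) -> None:
        """The cache structure is lost: rebuild changed items from the log."""
        self.cache = {}
        for item, data in self.log:  # later records overwrite earlier ones
            self.cache[item] = [data, True]


disk = {"ACCT-42": "100"}
cache = StoreInCache(disk)
cache.commit("ACCT-42", "150")
print(disk["ACCT-42"], cache.disk_io)    # 100 0: commit did not touch disk
cache.recover_after_structure_failure()  # simulate losing the structure
print(cache.castout(), disk["ACCT-42"])  # ['ACCT-42'] 150
```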

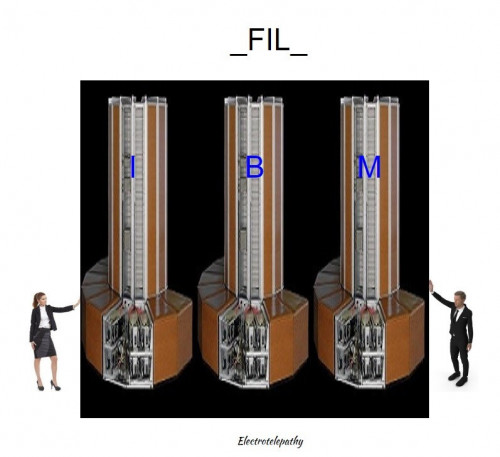

Triplex Supports Trilateralism

_FIL_ is a massive ternary optical supercomputer with a three-dimensional, optical, triaxial chip called the Chrystal Chip. It is the ultimate fulfillment of IBM's Z (zetta) Series mainframes. This three-mainframe supercomputer cluster is estimated to operate at speeds in excess of 3 zettahertz. It utilizes the electromagnetic spectrum with Atomic Thinking Units and Electropathy. However, the depiction above differs from the researched _FIL_, which was black and blue in color and had neon-like lights connecting the units at the top. Many depictions of futuristic supercomputers are likewise black and blue with lights at the top, like _FIL_.